Risueno-Segovia C, Dohmen D, Gultekin YB, Pomberger T, Hage SR (2023) Linguistic law-like compression strategies emerge to maximize coding efficiency in marmoset vocal communication. Proceedings of the Royal Society B, 290, 20231503. Human language follows statistical regularities or linguistic laws. For instance, Zipf’s law of brevity states that the more frequently a word is used, the shorter it tends to be. All human languages adhere to this word structure. However, it is unclear whether Zipf’s law emerged de novo in humans or whether it also exists in the non-linguistic vocal systems of our primate ancestors. Using a vocal conditioning paradigm, we examined the capacity of marmoset monkeys to efficiently encode vocalizations. We observed that marmosets adopted vocal compression strategies at three levels: (i) increasing call rate, (ii) decreasing call duration and (iii) increasing the proportion of short calls. Our results demonstrate that marmosets, when able to freely choose what to vocalize, exhibit vocal statistical regularities consistent with Zipf’s law of brevity that go beyond their context-specific natural vocal behaviour. This suggests that linguistic laws emerged in non-linguistic vocal systems in the primate lineage.

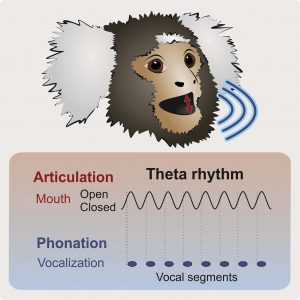

Risueno-Segovia C, Hage SR (2020) Theta synchronization of phonatory and articulatory systems in marmoset monkey vocal production. Current Biology, Epub ahead of print: doi.org/10.1016/j.cub.2020.08.019. Human speech shares a 3–8-Hz theta rhythm across all languages. According to the frame/content theory of speech evolution, this rhythm corresponds to syllabic rates derived from natural mandibular-associated oscillations. The underlying pattern originates from oscillatory movements of articulatory muscles tightly linked to periodic vocal fold vibrations. Such phono-articulatory rhythms have been proposed as one of the crucial preadaptations for human speech evolution. However, the evolutionary link in phono-articulatory rhythmicity between vertebrate vocalization and human speech remains unclear. From the phonatory perspective, theta oscillations might be phylogenetically preserved throughout all vertebrate clades. From the articulatory perspective, theta oscillations are present in non-vocal lip smacking, teeth chattering, vocal lip smacking, and clicks and faux-speech in non-human primates, which are potential evolutionary precursors of speech rhythmicity. Notably, a universal phono-articulatory rhythmicity similar to that in human speech is considered to be absent in non-human primate vocalizations, which are typically produced with sound modulations lacking concomitant articulatory movements. Here, we challenge this view by investigating the coupling of phonatory and articulatory systems in marmoset vocalizations. Using quantitative measures of acoustic call structure, e.g., amplitude envelope, and call-associated articulatory movements, i.e., inter-lip distance, we show that marmosets display speech-like bi-motor rhythmicity. These oscillations are synchronized and phase locked at theta rhythms.
Our findings suggest that oscillatory rhythms underlying speech production evolved early in the primate lineage, identifying marmosets as a suitable animal model to decipher the evolutionary and neural basis of coupled phono-articulatory movements.

Hage SR (2020) The role of auditory feedback on vocal pattern generation in marmoset monkeys. Current Opinion in Neurobiology 60, 92-98. Marmoset monkeys are known for their rich vocal repertoire. However, the underlying call production mechanisms remain unclear. By showing that marmoset monkeys are capable of interrupting and modulating ongoing vocalizations, recent studies challenged the decades-old concepts of primate vocal pattern generation, which held that monkey calls consist of one discrete call pattern. This article presents a revised version of the brainstem vocal pattern-generating network in marmoset monkeys, one that can respond to perturbing auditory stimuli with rapid modulatory changes of the acoustic call structure during ongoing calls. These audio-vocal integration processes might take place at both the cortical and subcortical levels.

Pomberger T*, Risueno-Segovia C*, Gultekin YB*, Dohmen D*, Hage SR (2019) Cognitive control of complex motor behavior in marmoset monkeys. Nature Communications 10, 3796 (*authors contributed equally). Marmosets have attracted significant interest in the life sciences. Similarities with human brain anatomy and physiology, such as the granular frontal cortex, as well as the development of transgenic lines and the potential for transferring rodent neuroscientific techniques to small primates make them a promising neurodegenerative and neuropsychiatric model system. However, whether marmosets can perform complex motor tasks in highly controlled experimental designs (one of the prerequisites for investigating higher-order control mechanisms underlying cognitive motor behavior) has not been demonstrated. We show that marmosets can be trained to perform vocal behavior in response to arbitrary visual cues in controlled operant conditioning tasks. Our results emphasize the marmoset as a suitable model to study complex motor behavior and the evolution of cognitive control underlying speech.

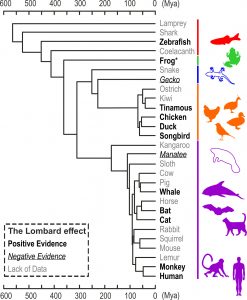

Luo J, Hage SR, Moss CF (2018) The Lombard effect: from acoustics to neural mechanisms. Trends in Neurosciences, online ahead of print: doi.org/10.1016/j.tins.2018.07.011. Understanding the neural underpinnings of vocal–motor control in humans and other animals remains a major challenge in neurobiology. The Lombard effect, a rise in call amplitude in response to background noise, has been demonstrated in a wide range of vertebrates. Here, we review both behavioral and neurophysiological data and propose that the Lombard effect is driven by a subcortical neural network, which can be modulated by cortical processes. The proposed framework offers mechanistic explanations for two fundamental features of the Lombard effect: its widespread taxonomic distribution across the vertebrate phylogenetic tree and the widely observed variations in compensation magnitude. We highlight the Lombard effect as a model behavioral paradigm for unraveling some of the neural underpinnings of audio-vocal integration.

Hage SR (2018) Auditory and audio-vocal responses of single neurons in the monkey ventral premotor cortex. Hearing Research 366, 82-89. Monkey vocalization is a complex behavioral pattern, which is flexibly used in audio-vocal communication. A recently proposed dual neural network model suggests that cognitive control might be involved in this behavior, originating from a frontal cortical network in the prefrontal cortex and mediated via projections from the rostral portion of the ventral premotor cortex (PMvr) and motor cortex to the primary vocal motor network in the brainstem. For the rapid adjustment of vocal output to external acoustic events, strong interconnections between vocal motor and auditory sites are needed, which are present at cortical and subcortical levels. However, the role of the PMvr in audio-vocal integration processes remains unclear. In the present study, single neurons in the PMvr were recorded in rhesus monkeys (Macaca mulatta) while the animals volitionally produced vocalizations in a visual detection task or passively listened to monkey vocalizations. Ten percent of randomly selected neurons in the PMvr modulated their discharge rate in response to acoustic stimulation with species-specific calls. More than four-fifths of these auditory neurons showed an additional modulation of their discharge rates before and/or during the monkeys’ motor production of the vocalization. Based on these audio-vocal interactions, the PMvr might be well positioned to mediate higher-order auditory processing with cognitive control of the vocal motor output to the primary vocal motor network. Such audio-vocal integration processes in the premotor cortex might constitute a precursor for the evolution of complex learned audio-vocal integration systems, ultimately giving rise to human speech.

Gultekin YB, Hage SR (2018) Limiting parental interaction during vocal development affects acoustic call structure in marmoset monkeys. Science Advances 4, eaar4012. Human vocal development is dependent on learning by imitation through social feedback between infants and caregivers. Recent studies have revealed that vocal development is also influenced by parental feedback in marmoset monkeys, suggesting vocal learning mechanisms in nonhuman primates. Marmoset infants that experience more contingent vocal feedback than their littermates develop vocalizations more rapidly, and infant marmosets with limited parental interaction exhibit immature vocal behavior beyond infancy. However, it is still unclear whether direct parental interaction is an obligate requirement for proper vocal development because all monkeys in the aforementioned studies were able to produce the adult call repertoire after infancy. Using quantitative measures to compare distinct call parameters and vocal sequence structure, we show that social interaction has a direct impact not only on the maturation of the vocal behavior but also on acoustic call structures during vocal development. Monkeys with limited parental interaction during development show systematic differences in call entropy, a measure of maturity, compared with their normally raised siblings. In addition, different call types were occasionally uttered in motif-like sequences similar to those exhibited by vocal learners, such as birds and humans, in early vocal development. These results indicate that a lack of parental interaction leads to long-term disturbances in the acoustic structure of marmoset vocalizations, suggesting an imperative role for social interaction in proper primate vocal development.

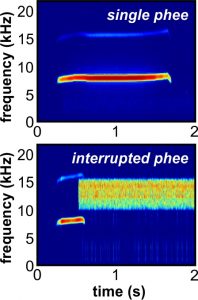

Pomberger T, Risueno-Segovia C, Löschner J, Hage SR. Precise motor control enables rapid flexibility in vocal behavior of marmoset monkeys. Current Biology 28, 788-794. Investigating the evolution of human speech is difficult and controversial because human speech surpasses nonhuman primate vocal communication in scope and flexibility [1–3]. Monkey vocalizations have been assumed to be largely innate, highly affective, and stereotyped for over 50 years [4, 5]. Recently, this perception has dramatically changed. Current studies have revealed distinct learning mechanisms during vocal development [6–8] and vocal flexibility, allowing monkeys to cognitively control when [9, 10], where [11], and what to vocalize [10, 12, 13]. However, specific call features (e.g., duration, frequency) remain surprisingly robust and stable in adult monkeys, resulting in rather stereotyped and discrete call patterns [14]. Additionally, monkeys seem to be unable to modulate their acoustic call structure under reinforced conditions beyond natural constraints [15, 16]. Behavioral experiments have shown that monkeys can stop sequences of calls immediately after acoustic perturbation but cannot interrupt ongoing vocalizations, suggesting that calls consist of single impartible pulses [17, 18]. Using acoustic perturbation triggered by the vocal behavior itself and quantitative measures of resulting vocal adjustments, we show that marmoset monkeys are capable of producing calls with durations beyond the natural boundaries of their repertoire by interrupting ongoing vocalizations rapidly after perturbation onset. Our results indicate that marmosets are capable of interrupting vocalizations only at periodic time points throughout calls, further supported by the occurrence of periodically segmented phees. These findings overturn decades-old concepts of primate vocal pattern generation, indicating that vocalizations do not consist of one discrete call pattern but are built of many sequentially uttered units, like human speech.

Gultekin YB, Hage SR. Limiting parental feedback disrupts vocal development in marmoset monkeys. Nature Communications 8, 14046. Vocalizations of human infants undergo dramatic changes across the first year by becoming increasingly mature and speech-like. Human vocal development is partially dependent on learning by imitation through social feedback between infants and caregivers. Recent studies have revealed that, in marmoset monkeys, similar developmental processes of apparently innate vocalizations are influenced by parental feedback. Marmosets produce infant-specific vocalizations that disappear after the first postnatal months. However, it is still unclear whether parental feedback is an obligate requirement for proper vocal development. Using quantitative measures to compare call parameters and vocal sequence structure, we show that, in contrast to normally raised marmosets, marmosets that were separated from their parents after the third postnatal month still produced infant-specific vocal behaviour at subadult stages. These findings suggest a significant role of social feedback in primate vocal development until the subadult stages and further show that marmoset monkeys are a compelling model system for early human vocal development.

Hage SR, Nieder A. Dual neural network model for the evolution of speech and language. Trends in Neurosciences 39, 813-829. Feature Review. Explaining the evolution of speech and language poses one of the biggest challenges in biology. We propose a dual network model that posits a volitional articulatory motor network (VAMN) originating in the prefrontal cortex (PFC; including Broca’s area) that cognitively controls vocal output of a phylogenetically conserved primary vocal motor network (PVMN) situated in subcortical structures. By comparing the connections between these two systems in human and nonhuman primate brains, we identify crucial biological preadaptations in monkeys for the emergence of a language system in humans. This model of language evolution explains the exclusiveness of non-verbal communication sounds (e.g., cries) in infants with an immature PFC, as well as the observed emergence of non-linguistic vocalizations in adults after frontal lobe pathologies.

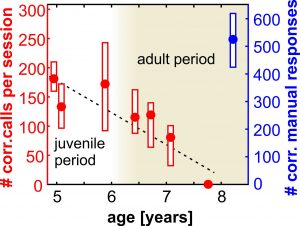

Hage SR, Gavrilov N, Nieder A. Developmental changes of cognitive vocal control in monkeys. Journal of Experimental Biology 219, 1744-1749. Featured in Inside JEB.

featured in Nature Research Highlights